Sir Isaac Newton revolutionized science and enriched our lives with the laws of motion. Here are similar laws that are as crucial as Newton’s laws which we all “software people” need to be aware of. You might have come across these laws/principles in bits and pieces. My attempt here is to compile them together and describe in the context of the IT/software industry.

The Peter Principle

In a hierarchy, every employee tends to rise to his level of incompetence.

Laurence J. Peter

Peter’s Principle is a concept in management developed by Laurence J. Peter. It says that people in an organizational hierarchy tend to rise to “a level of respective incompetence” eventually. Employees are promoted based on their success in previous jobs until they reach a level at which they are no longer competent, as skills in one job do not translate well to another.

For example, a very skilled software engineer (individual contributor) who excels in a specific programming language might be promoted to a manager position. While great at engineering skills, if he/she lacks the necessary leadership and communications skills, his/her performance might decline in the new role.

Another example could be promoting developers to architect roles based on their coding skills, without considering their design and articulation skills. If someone is an excellent developer, the same person not necessarily would be a good architect.

Moore’s law

Number of transistors in an integrated circuit (IC) doubles approximately every two years.

Gordeon Moore

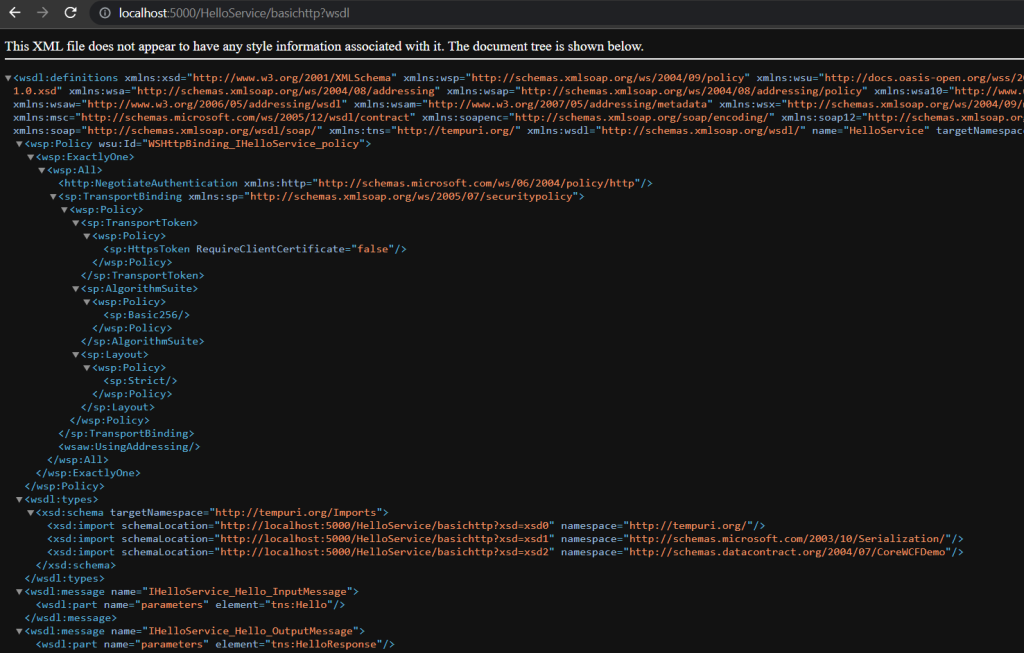

It was named after Gordon Moore who was a co-founder of Fairchild Semiconductor. It’s not a law of physics, but a long-term trend in technology evolution and as you can see in the graph below, it checks out.

As the number of transistors increases, the cost of computing decreases. This makes high-performance computing more accessible, enabling more businesses to leverage advanced software solutions. The big leap in IoT, cloud, and AI we are witnessing is attributed to the amount of transistors (computing power) that are packed in smaller area.

Count of transistors -> smaller chip size -> cheaper it gets -> smarter/faster compute

Note that while Moore’s Law has held true for more than half a century, it faces considerable challenges as transistor sizes approach atomic scales so it can not decrease further.

Conway’s Law

Organizations that design systems are constrained to produce designs that are copies of their own communication structures.

Melvin Conway

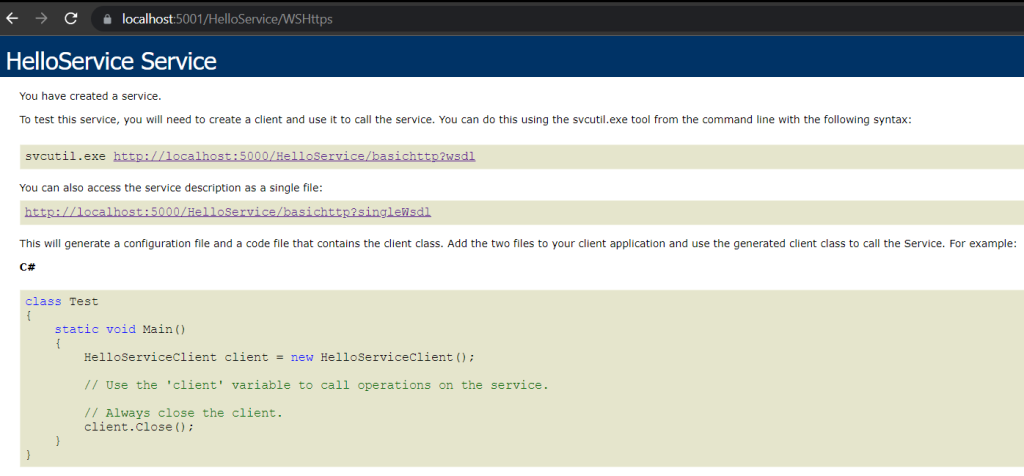

Conway’s Law establishes the link between the composition of organizations and the systems designed by those organizations. It was introduced by Melvin Conway in 1967.

Conway’s Law states that the architecture/ design of a system typically reflects the organization of the team that built it. This is because, for a product to work, everybody involved in its design needs to communicate with each other to ensure compatibility across the board. So by the time the product is eventually built, it is nothing but a copy of the organization structure/ hierarchy of the communication structure adopted by that company.

Considering Conway’s Law can help companies make informed decisions about team structures and communication strategies, ultimately leading to more effective architectures. And there is no perfect answer to that. Like many other questions, the answer is “it depends”. Lately, companies seem to organize their workforces in smaller/leaner teams to ensure agility in their delivery process.

As shown in the picture above, organizations that consist of small, lean and distributed teams end up designing their software modular and distributed. Organizations having large teams tend to implement monolithic architectures.

Murphy’s Law

Anything that can go wrong will go wrong (at the worst possible time).

Edward Murphy Jr.

It was named after American aerospace engineer Edward A. Murphy Jr.

In the context of the software industry, Murphy’s Law underlines the importance of anticipating potential problems and building resilient systems. For instance, engineers build error handling and retry mechanisms to ensure that a single error does not result in complete system failure. Engineers designing complex machines or electronics create redundancies in critical components to safeguard against malfunction.

If there’s a bug in the code, it will eventually get you, often at the most inconvenient time. If there’s any chance of data loss, it is bound to happen! If there is a security flaw in your code, it will be misused. This emphasizes the importance of quality assurance, regular backups and robust data recovery strategies. The purpose of Murphy’s Law is not to be negative, but rather to highlight the importance of planning and testing to prevent mistakes.

Murphy’s law highlights one key point: computers do what you tell them to do, not what you want them to do.

Wrapping up

It’s fun to know these laws/ principles that apply to all of us and unknowingly or knowingly affect our lives. Know it, practice it, share it.